The most challenging and ambiguous thing I have ever encountered in testing is gathering quality metrics and sharing them with stakeholders (everyone who has ownership in the project, including developers, QA, and PMs). Defining clear parameters consistently enables high-performance delivery across the team. So, after years of experience, I’ve customised a high-level approach to gathering metrics around a set of defined parameters, and it helps me deliver my best.

I prefer results-driven metrics, and I’ve divided quality metrics gathering into three levels:

- Level 1: The Core

- Test run summary: This serves as the “quality gate” for the application. Its prime objective is to explain the details of, and activities involved in, the testing performed for the project.
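To make the idea of a quality gate concrete, here is a minimal Python sketch. It assumes a hypothetical list of `(test_id, outcome)` results and an assumed gate of a 95% pass rate over executed (non-skipped) tests. Neither the data shape nor the threshold comes from any particular tool, so adjust both to your own project.

```python
from collections import Counter

# Hypothetical run results: (test_id, outcome), outcome is "passed", "failed" or "skipped".
RESULTS = [
    ("TC-01", "passed"),
    ("TC-02", "passed"),
    ("TC-03", "failed"),
    ("TC-04", "skipped"),
]

# Assumed gate: at least 95% of executed (non-skipped) tests must pass.
PASS_RATE_THRESHOLD = 0.95


def summarise(results):
    """Count each outcome and compute the pass rate over executed tests only."""
    counts = Counter(outcome for _, outcome in results)
    executed = counts["passed"] + counts["failed"]
    pass_rate = counts["passed"] / executed if executed else 0.0
    return counts, pass_rate


def gate_passes(pass_rate, threshold=PASS_RATE_THRESHOLD):
    """True when the run meets the assumed quality-gate threshold."""
    return pass_rate >= threshold


if __name__ == "__main__":
    counts, rate = summarise(RESULTS)
    verdict = "PASS" if gate_passes(rate) else "FAIL"
    print(dict(counts), f"pass rate={rate:.0%}", "gate:", verdict)
```

Excluding skipped tests from the denominator is a design choice; some teams count them as failures instead.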


- Defect + Status + Priority + Severity: This helps us understand what should be prioritised.

| ID | Status | Priority | Severity |
|---|---|---|---|
| # | Open | Critical | High |
| # | Re-Open | Major | Low |
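A small sketch of how a defect table like this could drive triage: keep only active defects, then sort by priority and then severity. The `Defect` class, the statuses treated as active, and the rank orderings are assumptions for illustration, not any bug tracker’s schema.

```python
from dataclasses import dataclass

# Assumed orderings (lower number = more urgent); adjust to your tracker's scale.
PRIORITY_RANK = {"Critical": 0, "Major": 1, "Minor": 2}
SEVERITY_RANK = {"High": 0, "Medium": 1, "Low": 2}
# Assumed set of statuses that still need attention.
ACTIVE_STATUSES = {"Open", "Re-Open"}


@dataclass
class Defect:
    defect_id: str
    status: str
    priority: str
    severity: str


def triage(defects):
    """Return active defects ordered by priority first, then severity."""
    active = [d for d in defects if d.status in ACTIVE_STATUSES]
    return sorted(active, key=lambda d: (PRIORITY_RANK[d.priority], SEVERITY_RANK[d.severity]))


if __name__ == "__main__":
    defects = [
        Defect("D-3", "Re-Open", "Major", "Low"),
        Defect("D-1", "Open", "Critical", "High"),
        Defect("D-2", "Closed", "Critical", "High"),
    ]
    for d in triage(defects):
        print(d.defect_id, d.status, d.priority, d.severity)
```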

- Level 2: Required

- Execution per week / sprint: Tracking test execution on a weekly or per-sprint basis helps the team align and shows how well resources are being utilised.


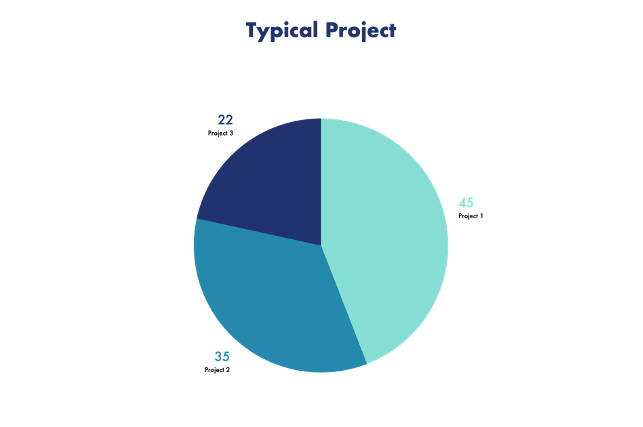
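A minimal sketch of rolling up executions per sprint, assuming a hypothetical execution log of `(sprint, tester, outcome)` tuples. Counting runs per tester is just one possible view of resource utilisation; it is an illustration, not a standard report format.

```python
from collections import defaultdict

# Hypothetical execution log: (sprint, tester, outcome) for every test run.
EXECUTIONS = [
    ("Sprint 1", "alice", "passed"),
    ("Sprint 1", "bob", "failed"),
    ("Sprint 1", "alice", "passed"),
    ("Sprint 2", "bob", "passed"),
]


def executions_per_sprint(executions):
    """Return {sprint: {"total": n, "passed": n, "by_tester": {tester: n}}}."""
    report = {}
    for sprint, tester, outcome in executions:
        entry = report.setdefault(
            sprint, {"total": 0, "passed": 0, "by_tester": defaultdict(int)}
        )
        entry["total"] += 1
        if outcome == "passed":
            entry["passed"] += 1
        entry["by_tester"][tester] += 1  # runs executed by each tester in this sprint
    return report


if __name__ == "__main__":
    for sprint, entry in executions_per_sprint(EXECUTIONS).items():
        print(sprint, entry["total"], "runs,", entry["passed"], "passed,", dict(entry["by_tester"]))
```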

- Results per requirement: This maps the tests we have to the requirements they cover. For example, 90% of my testing revolves around Requirement 1 and only 5% each around Requirement 2 and Requirement 3. This helps us see whether we are over-testing or under-testing, and traces testing back to the requirements.

| Requirement | Share of testing |
|---|---|
| Requirement 1 | 90% |
| Requirement 2 | 5% |
| Requirement 3 | 5% |

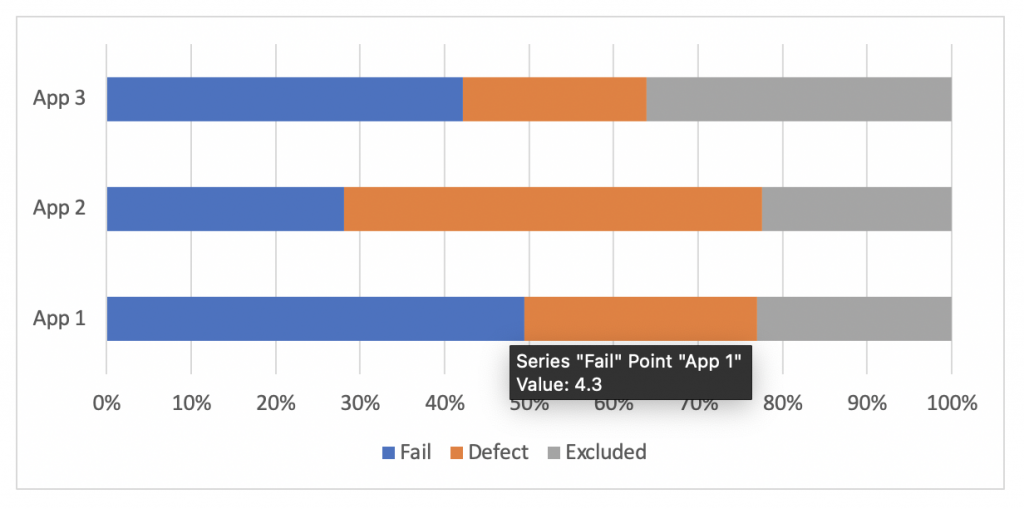
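The percentages above are each requirement’s share of the total tests. A minimal sketch, assuming a hypothetical test-to-requirement mapping chosen to reproduce the 90/5/5 split, and assumed thresholds for flagging possible over- and under-testing. The full requirement list is passed in so that a requirement with no tests at all shows up as 0% rather than disappearing.

```python
from collections import Counter

# Hypothetical traceability data: 18 tests cover Requirement 1, one each covers 2 and 3.
TEST_TO_REQUIREMENT = {f"TC-{i:02d}": "Requirement 1" for i in range(1, 19)}
TEST_TO_REQUIREMENT.update({"TC-19": "Requirement 2", "TC-20": "Requirement 3"})
REQUIREMENTS = ["Requirement 1", "Requirement 2", "Requirement 3"]

# Assumed flagging thresholds; tune them to the project's risk profile.
UNDER_TESTED_BELOW = 0.10
OVER_TESTED_ABOVE = 0.60


def coverage_shares(mapping, requirements):
    """Share of all tests that trace to each requirement (0.0 if none do)."""
    counts = Counter(mapping.values())
    total = sum(counts.values())
    return {req: (counts[req] / total if total else 0.0) for req in requirements}


def flag(share):
    """Label a share using the assumed thresholds."""
    if share < UNDER_TESTED_BELOW:
        return "possibly under-tested"
    if share > OVER_TESTED_ABOVE:
        return "possibly over-tested"
    return "ok"


if __name__ == "__main__":
    for req, share in coverage_shares(TEST_TO_REQUIREMENT, REQUIREMENTS).items():
        print(f"{req}: {share:.0%} ({flag(share)})")
```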

- Defect Density: This helps identify the focus area, i.e. which part of the application has the most defects and therefore where testing should concentrate.
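Defect density is commonly expressed as defects per unit of size, such as defects per thousand lines of code (KLOC). The sketch below uses hypothetical module names, defect counts and sizes. It shows why normalising by size matters: a small module with fewer defects can still rank above a larger one.

```python
# Hypothetical per-module data: defects found and size in thousands of lines of code (KLOC).
MODULES = {
    "checkout": {"defects": 12, "kloc": 4.0},
    "search": {"defects": 3, "kloc": 6.0},
    "profile": {"defects": 2, "kloc": 1.0},
}


def defect_density(defects, kloc):
    """Defects per KLOC; the module size must be positive."""
    if kloc <= 0:
        raise ValueError("module size must be positive")
    return defects / kloc


def rank_by_density(modules):
    """Return (module, density) pairs, highest density first."""
    densities = {name: defect_density(m["defects"], m["kloc"]) for name, m in modules.items()}
    return sorted(densities.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, density in rank_by_density(MODULES):
        print(f"{name}: {density:.2f} defects/KLOC")
```

Here `profile` (2 defects in 1 KLOC) ranks above `search` (3 defects in 6 KLOC), which a raw defect count would hide.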

- Level 3: Something Extra

- Manual vs Automated: This tracks whether any manual tests can be moved to automation. Not every test can be automated, but we can, for example, build an automated smoke test suite. The key question is which tests carry the most value from a business perspective.
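One way to track this is an automation ratio plus a list of manual tests worth automating, ordered by business value. In this sketch, the `automatable` flag and the 1 to 5 `business_value` score are hypothetical fields you would agree on with stakeholders; nothing here comes from a specific test management tool.

```python
from dataclasses import dataclass


@dataclass
class CaseRecord:
    name: str
    automated: bool
    automatable: bool    # hypothetical flag set during review; not every test qualifies
    business_value: int  # hypothetical score, 1 (low) to 5 (high), agreed with stakeholders


def automation_ratio(cases):
    """Fraction of the suite that is already automated."""
    return sum(c.automated for c in cases) / len(cases) if cases else 0.0


def automation_candidates(cases):
    """Manual tests that could be automated, highest business value first."""
    manual = [c for c in cases if not c.automated and c.automatable]
    return sorted(manual, key=lambda c: c.business_value, reverse=True)


SUITE = [
    CaseRecord("login smoke", automated=True, automatable=True, business_value=5),
    CaseRecord("checkout happy path", automated=False, automatable=True, business_value=5),
    CaseRecord("exploratory UX review", automated=False, automatable=False, business_value=3),
    CaseRecord("report export", automated=False, automatable=True, business_value=2),
]

if __name__ == "__main__":
    print(f"automated: {automation_ratio(SUITE):.0%}")
    for c in automation_candidates(SUITE):
        print("candidate:", c.name, c.business_value)
```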

- Last Run: Keeping a log of each run helps identify whether a test should be kept. During development, I’ve sometimes seen an entire workflow change or get updated because of a change in requirements. Recording the last run tells us what we can do to make our test suite better.


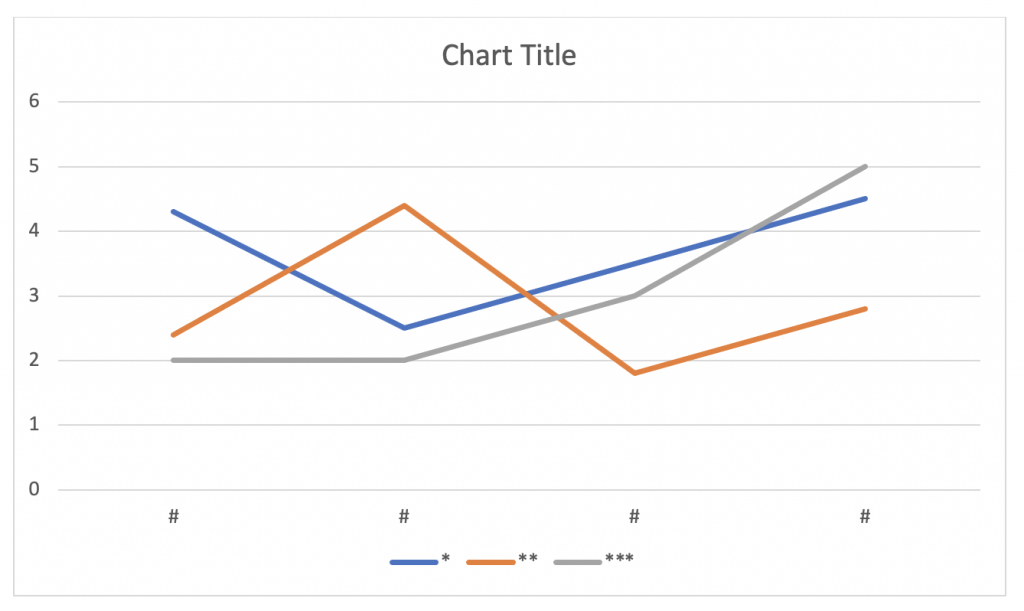
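A minimal sketch of using last-run dates to flag tests for review, assuming a hypothetical `{test_name: last_run_date}` mapping and an assumed 30-day staleness window. A flagged test is not automatically obsolete; it is a prompt to check whether the requirement behind it has changed.

```python
from datetime import date, timedelta

# Assumed policy: a test not run within the last 30 days is flagged for review.
STALE_AFTER = timedelta(days=30)

# Hypothetical last-run log; None means the test has never been run.
LAST_RUN = {
    "TC-login": date(2024, 5, 20),
    "TC-legacy-export": date(2024, 1, 10),
    "TC-new-flow": None,
}


def stale_tests(last_run, today, stale_after=STALE_AFTER):
    """Return test names (sorted) that were never run or not run within the window."""
    flagged = []
    for name in sorted(last_run):
        ran_on = last_run[name]
        if ran_on is None or today - ran_on > stale_after:
            flagged.append(name)
    return flagged


if __name__ == "__main__":
    for name in stale_tests(LAST_RUN, today=date(2024, 6, 1)):
        print("review:", name)
```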

- Flapping: This is when the same test, run on different machines, variables, environments, or browsers, fails in one environment but passes in another. When that happens, we can go back and check whether the test is written correctly, whether anything has changed, and whether the environment or variables are affecting execution. This can be captured on a “result-driven graph”, which helps identify whether the issue lies with the test case, the source code, or perhaps the requirement itself.
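A minimal sketch of detecting flapping from cross-environment results, assuming hypothetical `(test, environment, outcome)` records. A test is flagged only when it passes in at least one environment and fails in another; a test that fails everywhere is a consistent failure, not flapping. Outcomes other than passed or failed (e.g. skipped) are ignored.

```python
from collections import defaultdict

# Hypothetical results of the same tests across environments.
RESULTS = [
    ("TC-login", "chrome", "passed"),
    ("TC-login", "firefox", "failed"),
    ("TC-search", "chrome", "passed"),
    ("TC-search", "firefox", "passed"),
    ("TC-export", "chrome", "failed"),
    ("TC-export", "firefox", "failed"),
]


def find_flapping(results):
    """Return {test: sorted failing environments} for tests that both pass and fail."""
    outcomes = defaultdict(lambda: {"passed": set(), "failed": set()})
    for test, env, outcome in results:
        if outcome in ("passed", "failed"):  # ignore skipped or other outcomes
            outcomes[test][outcome].add(env)
    return {
        test: sorted(envs["failed"])
        for test, envs in outcomes.items()
        if envs["passed"] and envs["failed"]
    }


if __name__ == "__main__":
    for test, failing_envs in find_flapping(RESULTS).items():
        print(test, "fails on:", ", ".join(failing_envs))
```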